Why CCAI Matters Now

Customer expectations are higher. Channels are many. Agents face heavy cognitive load. Budgets are tight. A good CCAI platform improves resolution speed, lifts CSAT, reduces handle time, and protects quality at scale. The wrong one adds complexity and cost. Your goal is simple: match capabilities to business outcomes, then implement in a way that sticks.

Define Outcomes Before Features

Start with measurable goals. Pick 3–5 targets that tie to real value:

- Reduce average handle time (AHT) by 10–20%.

- Increase first contact resolution (FCR) by 5–10 points.

- Deflect 15–30% of repetitive contacts to automation with no CSAT drop.

- Cut after-call work (ACW) by 30–60% via auto-summary.

- Improve QA coverage from 2–5% sampling to 90–100% AI scoring.

Translate each outcome into a metric, owner, and timeline. Features only matter if they move these numbers.

Core Capability Map (What to Look For)

1) Intelligent Self-Service & Virtual Agents

- NLU + LLM hybrid: Robust intent detection plus generative flexibility. Guardrails for accuracy.

- Omnichannel: Voice, chat, email, messaging apps, and in-app experiences.

- Task completion: APIs to check order status, process refunds, schedule appointments, reset passwords.

- Dialog design: Low-code tools, reuse of flows across channels, and version control.

- Fallback logic: graceful handoff to agents with full context and transcripts.

2) Agent Assist

- Real-time guidance: Suggested replies, knowledge surfacing, compliance prompts.

- Live transcription: Accurate, low-latency speech-to-text with speaker separation.

- Auto-wrap up: Summaries, disposition codes, and CRM notes generated instantly.

- Next-best action: Dynamic suggestions tied to product, policy, and customer data.

3) Post-Interaction Analytics & QA

- Conversation intelligence: Topics, sentiment, interruption, silence, and compliance flags.

- Automated QA: Scoring rubrics, coaching moments, calibrations with supervisors.

- Root-cause analysis: Trend detection, cost drivers, and intent drift alerts.

4) Knowledge & Content

- Retrieval-augmented generation (RAG): Answers grounded in your documents and policies.

- Versioned knowledge: Approval workflows and audit trails.

- Multilingual coverage: High-quality translation plus locale-specific content.

5) Integration & Data Fabric

- Native connectors: CRM, ticketing, UCaaS/CCaaS, order systems, payment gateways.

- Event streaming: Webhooks or Kafka for real-time data flow.

- Open APIs: For custom actions and bespoke analytics.

6) Governance, Risk, and Compliance

- PII handling: Redaction in real time and at rest. Data retention controls.

- Access controls: Role-based permissions, SSO, SCIM.

- Compliance packs: SOC 2, ISO 27001, PCI DSS for payments, HIPAA where needed.

- Explainability: Reason codes, traceable sources for AI answers.

7) Performance & Scalability

- Latency: Real-time voice under ~300 ms round-trip; chat under ~1 second.

- Concurrency: Bursting during promotions or outages without throttling.

- Resilience: Multi-region failover, uptime SLAs, RTO/RPO clarity.

The 2025 Architecture Question: LLM-Native or NLU-First?

- LLM-native platforms shine for speed of design, dynamic handling of edge cases, and natural conversation. They need strong guardrails (RAG, policies, evaluation harnesses).

- NLU-first platforms excel in structured flows, deterministic outcomes, and low variance. They can feel rigid without careful design.

Best of both: use a hybrid approach—LLM for understanding and generation; NLU/flows for critical paths like refunds, compliance workflows, and authentication.

✅ Vendor Evaluation Scorecard (Template)

| Criterion | Weight | Vendor A | Vendor B | Vendor C |

|---|---|---|---|---|

| Use-case coverage | 20% | 4.5 | 4.0 | 3.5 |

| AI quality & guardrails | 20% | 4.0 | 4.5 | 3.0 |

| Integration fit | 15% | 3.5 | 4.0 | 4.5 |

| Agent assist strength | 10% | 4.0 | 3.5 | 3.0 |

| Analytics & QA | 10% | 3.5 | 4.5 | 3.5 |

| Governance & security | 10% | 4.0 | 4.0 | 3.0 |

| Admin UX & operations | 10% | 4.5 | 3.5 | 3.5 |

| TCO & flexibility | 5% | 3.5 | 4.0 | 4.5 |

| Weighted Total | 100% | 4.1 | 4.1 | 3.6 |

Build vs. Buy vs. Blend

- Buy when you need speed, reliability, and a broad feature set.

- Build when your processes are unique, or you have strict data residency and IP needs.

- Blend for the common modern pattern: licensed CCAI core + your custom actions, prompts, and RAG layer over your knowledge and data.

Pricing Models to Expect (and How to Compare)

- Per-interaction: Pay per call/chat/minute. Simple; watch peaks.

- Per-hour/seat: For agent tools and QA analytics.

- Per-message/token: Common for LLM usage; monitor prompt/response sizes.

- Platform fees: Orchestration, governance, analytics modules.

Always calculate Total Cost of Ownership (TCO): platform + usage + integration + change management + model evaluation + ongoing optimization. Negotiate committed-use discounts and burst protection.

A Practical Evaluation Framework

Step 1: Use-Case Fit

Score each vendor (1–5) against your top use cases:

- Password resets

- Order tracking and changes

- Billing issues

- Appointment scheduling

- Warranty/returns

- Outage handling

- Onboarding and policy Q&A

Step 2: Data & Integration Fit

- CRM and ticketing fit.

- Authentication flow support (OTP, knowledge-based, OAuth).

- Back-office actions via APIs.

- Data export and warehouse integration.

$tep 3: AI Quality & Safety

- Bench test with your transcripts and FAQs.

- Hallucination rate under your threshold.

- Guardrails: prompt shields, restricted topics, and redaction.

- Offline evaluation: same prompts, repeatable outputs.

Step 4: Operations & Change

- Admin UX: is it usable by non-developers?

- Versioning, A/B testing, and rollback.

- Monitoring: real-time dashboards and alerting.

- Coaching workflow for supervisors.

Step 5: Commercials & Risk

- Transparent pricing and clear SLAs.

- Security posture and compliance evidence.

- Exit plan: data portability and deprovisioning.

✅ KPI Tracking Cadence

| Frequency | Metrics to Monitor |

|---|---|

| Weekly | AHT, deflection, FCR, CSAT, automation escalations, QA scores, compliance flags. |

| Monthly | Cost per contact, agent productivity, coaching impact, knowledge freshness. |

| Quarterly | Model evaluation, incident reviews, ROI check, roadmap resets. |

Must-Ask Vendor Questions (and Why They Matter)

- How do you ground generative answers in our content?

Look for RAG with source citations and fallbacks. - What’s your strategy for sensitive data?

Real-time PII redaction, data boundaries, and tenant isolation. - How do we evaluate and tune the model?

Expect offline test harnesses, golden test sets, and prompt libraries. - What happens during outages and spikes?

Ask for capacity planning, auto-scaling, and failover details. - Can supervisors change prompts and flows safely?

You need versioning, approvals, and rollback. - How do you measure and improve intent coverage over time?

Intent drift detection, auto-clustering, and retraining playbooks. - What are my exit options?

Data export formats, transcript ownership, and migration support.

Security, Privacy, and Compliance Checklist

- PII redaction: Before storage and analytics.

- Encryption: In transit and at rest; KMS control if possible.

- Access control: SSO, MFA, role-based permissions, least-privilege APIs.

- Auditability: Logs for changes, prompts, and model versions.

- Data residency: Regions that match your legal needs.

- Model isolation: Vendor should not train on your data by default.

- Payment flows: PCI DSS scope isolation for IVR payments.

Multilingual and Localization

- Support for your key languages with measured accuracy.

- Fine-tuned or adaptive ASR for dialects and accents.

- Locale-aware formatting for dates, currencies, and units.

- Local policy content variations, not just translation.

Human Design Still Wins: Conversation & UX

- Clear turn-taking. Avoid over-talk in voice.

- Natural confirmations. No rigid scripts.

- Short replies, progressive disclosure.

- Easy escape hatches to humans.

- Honest boundaries: “I can’t do X yet, but here’s what I can do.”

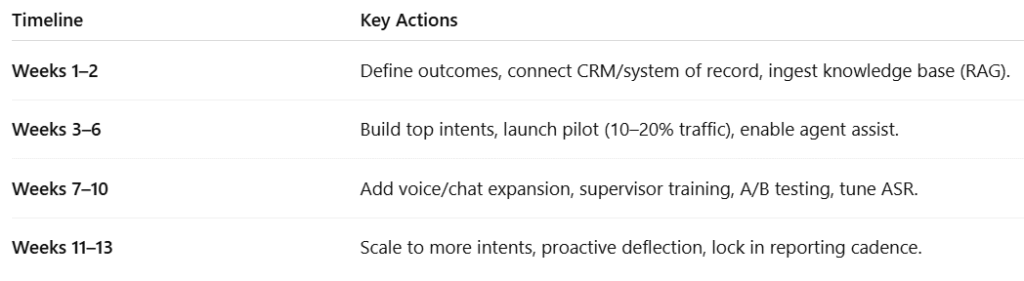

Implementation Roadmap (90 Days)

1.Weeks 1–2: Foundations

- Finalize outcomes and metrics.

- Prioritize 3–4 high-volume intents.

- Connect CRM and one key system of record.

- Ingest knowledge base; enable RAG.

2.Weeks 3–6: Design & Pilot

- Build flows for top intents with guardrails.

- Launch to 10–20% traffic on one channel.

- Turn on agent assist for a single team.

- Start auto-QA on 100% of calls/chats.

3.Weeks 7–10: Expand & Optimize

- Add voice if you started with chat (or vice versa).

- Train supervisors on coaching workflows.

- A/B test prompts and knowledge sources.

- Tune ASR and barge-in for voice.

4.Weeks 11–13: Scale

- Add 5–10 more intents.

- Introduce proactive deflection in web/app.

- Lock in reporting cadence with business owners.

KPIs and Governance Cadence

- Weekly: AHT, deflection rate, FCR, containment, CSAT by intent, automation-related escalations, QA scores, compliance flags.

- Monthly: Cost per contact, agent productivity, coaching impact, knowledge freshness, top emergent topics.

- Quarterly: Model evaluation results, incident reviews, roadmap resets, ROI check.

Create a standing AI Council: operations lead, CX owner, compliance, data engineering, and a product manager. Keep decisions documented.

Common Pitfalls (and How to Avoid Them)

- Starting too broad: Begin with 3–4 intents that represent 30–40% of volume.

- Ignoring data quality: Bad knowledge yields bad answers. Assign owners and SLAs for updates.

- No change management: Train agents and supervisors. Celebrate quick wins.

- Measuring vanity metrics: Track outcomes, not just containment. Watch CSAT and recontact.

- Underestimating voice complexity: Test ASR with real accents, noise, and devices.

- Skipping an exit plan: Keep your prompts, flows, rubrics, and test sets portable.

Light RFP Outline (Copy/Paste)

Section A: Company & Security

- Corporate background, references, uptime history

- Security standards, data residency options, audit reports

Section B: Capabilities

- Virtual agent (voice/chat), agent assist, auto-QA

- Knowledge/RAG approach, multilingual support

- Analytics, dashboards, alerting

$ection C: Integrations

- CRM/ticketing, telephony/CCaaS, data warehouse

- Custom action framework and API limits

Section D: Performance

- Latency targets, concurrency limits, autoscaling, failover

Section E: Operations

- Admin UX, roles/permissions, versioning, A/B testing

- Monitoring, incident response, support SLAs

$ection F: Commercials

- Pricing structure, committed-use discounts

- Professional services, training, success plan

- Termination and data export

Quick Buyer’s Cheat Sheet

Choose this vendor if you need:

- Fast time-to-value: Strong out-of-the-box intents, clean admin UI, many native connectors.

- Deep control: Rich APIs, prompt ops, evaluation tooling, and custom actions.

- Heavy analytics/QA: Conversation intelligence + calibrated QA and coaching.

- Voice-first excellence: Low-latency ASR/TTS, silence handling, and barge-in tuning.

- Strict compliance: Proven redaction, model isolation, and data residency options.

Walk away if:

- Demos look great but pilots fail your metrics.

- Pricing is opaque or spikes with burst usage.

- There’s no RAG or guardrails for generative answers.

- You can’t export your data and artifacts.

Final Thoughts

In 2025, the best CCAI choice is not the one with the longest feature list. It’s the platform that helps your team solve the highest-value problems quickly and safely—and keeps improving with you. Anchor every decision to business outcomes, insist on measurable pilots, and invest in the human workflows that turn AI into durable CX gains.